Natural Language Processing … for Image Search?

Natural language processing (NLP) is a major part of search — so much so that it is even being used in image search applications.

For example, Google said, when talking about its MUM model, “Eventually, you might be able to take a photo of your hiking boots and ask, ‘can I use these to hike Mt. Fuji?’ MUM would understand the image and connect it with your question to let you know your boots would work just fine. It could then point you to a blog with a list of recommended gear.” This makes MUM multimodal because it understands both text and images.

In this post, I’ll show how vector embeddings outperform keyword search for multimodal text-to-image search. I’ll also discuss a solution that allows you to leverage your existing OpenSearch installation to quickly and easily create a text-to-image search application.

The Limitations of Keyword Search

Previously, searching for images with text meant running a keyword search that compared the query against the image captions. The image itself wasn’t even used in the search.

One problem with this approach is that relevant images might not have captions. Those images are then never returned as candidates, even though they are relevant.

Another problem with keyword search is it could omit images with captions that don’t share many keywords with the query but are in fact relevant images. This could impact business in e-commerce applications because sellers often don’t enter the most descriptive text, so even if their item is exactly what the buyer is looking for, it might not be returned as a candidate.

Also, as shown in this post, keyword search has limited understanding of user intent and could return irrelevant images even if there are “multiple matching terms between the query and the result.” As shown below, it incorrectly returned an image where the caption matched the keywords eating fish, but it missed the main search term bear.

Query: A bear eating fish by a river

Result: heron eating fish

An irrelevant search result returned using keyword search for the query “A bear eating fish by a river.” Source
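
The failure mode above can be illustrated with a toy term-overlap scorer. This is only a sketch: real keyword engines use more sophisticated ranking functions, and every caption except “heron eating fish” is a hypothetical one made up for illustration.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))

def keyword_score(query, caption):
    """Count how many distinct query terms also appear in the caption."""
    return len(tokenize(query) & tokenize(caption))

query = "A bear eating fish by a river"
captions = [
    "heron eating fish",        # caption from the example above
    "grizzly catching salmon",  # hypothetical caption on a relevant image
    "",                         # hypothetical relevant image with no caption
]

# Score every caption, then rank by the number of shared terms.
scores = {caption: keyword_score(query, caption) for caption in captions}
ranked = sorted(captions, key=lambda caption: scores[caption], reverse=True)

for caption in ranked:
    print(f"{scores[caption]}  {caption!r}")
```

In this sketch the heron caption ranks first because it shares “eating” and “fish” with the query, while the two hypothetical bear images score zero: one uses different words and the other has no caption at all.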

Vector Embeddings — The Key to Text-to-Image Search

To address the previously mentioned keyword search limitations, we can use a multilingual CLIP model to generate vector embeddings. CLIP was created by OpenAI, and they state that it “efficiently learns visual concepts from natural language supervision.” Basically, CLIP maps text and images to the same embedding space where they can be compared for similarity.
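
To make the idea of a shared embedding space concrete, here is a minimal sketch of how a text query could be compared against images once both have been embedded. The three-dimensional vectors and file names are made up for illustration; a real CLIP model produces much higher-dimensional vectors, and cosine similarity is assumed here as the comparison measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Made-up vector standing in for the embedding of the text query
# "A bear eating fish by a river".
text_embedding = [0.9, 0.1, 0.3]

# Made-up vectors standing in for image embeddings (hypothetical file names).
image_embeddings = {
    "bear_with_salmon.jpg": [0.8, 0.2, 0.4],
    "heron_with_fish.jpg": [0.1, 0.9, 0.3],
}

# Pick the image whose embedding is most similar to the text embedding.
best = max(
    image_embeddings,
    key=lambda name: cosine_similarity(text_embedding, image_embeddings[name]),
)
print(best)
```

Note that no caption is involved: the image is ranked by where its own embedding falls relative to the query’s embedding.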

As we discussed in a previous post, vector embeddings better capture the searcher’s intent and the contextual meaning of the query. Instead of simply matching keywords, they take into account what the words mean and not just the words themselves.

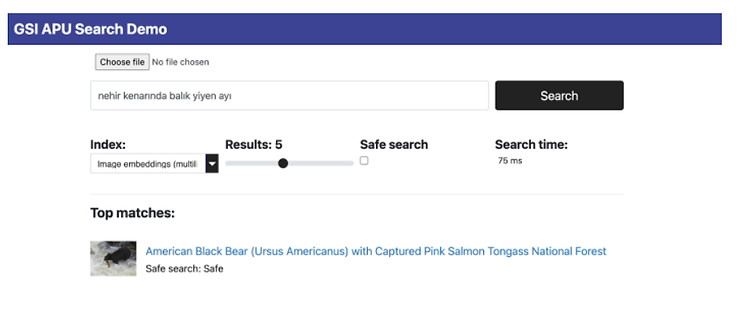

An example of that can be seen in the image below. In this case, vector embeddings were used instead of keywords. The same query about a bear eating a fish was used, but unlike the keyword approach that returned an irrelevant image, vector embeddings returned a relevant image.

Not only did the vector embeddings approach return a relevant image, it also showed that it understands multiple languages (Turkish, in this example).

Vector embeddings can also improve recall. Recall is important because it can impact a company’s business. For example, in e-commerce, sellers often don’t enter very descriptive text, don’t use the right keywords, or enter incorrect text descriptions. In these cases, keyword search could prevent a product from being returned as a match, even if it actually is one. This means a missed business opportunity for the seller.

Vector embeddings address this recall issue because even though the text descriptions were poor in those examples above, if there were relevant images that went with them, the vector embeddings of the images would allow those images to be returned as matches. Thus, the seller is no longer penalized for entering poor product descriptions, or even no descriptions.

Easily Add Vector Embedding Search to Your OpenSearch

As we wrote about in this post, GSI Technology’s OpenSearch k-NN plugin allows users to easily add production-grade vector embedding search to their search pipeline. They can leverage their current OpenSearch installation rather than having to learn new software for one of the other vector search options out there. This saves them valuable time and resources.

Dmitry Kan and Aarne Talman recently published a great blog post where they explained how they used our OpenSearch k-NN plugin as part of their search stack to easily create a text-to-image search application.

In addition to saving developers valuable time and resources, our OpenSearch k-NN plugin allows for billion-scale neural search and addresses one of the key limitations of native OpenSearch: its lack of pre-filter support for nearest neighbor vector search. Pre-filtering on metadata is used in many search applications. For example, product metadata such as item description, item title, category, color, or brand is often used as a pre-filter for a search query.

The OpenSearch website states: “Because the native library indices are constructed during indexing, it is not possible to apply a filter on an index and then use this search method. All filters are applied on the results produced by the approximate nearest neighbor search.” This means that native OpenSearch only supports post-filtering of the approximate nearest neighbor results and doesn’t support pre-filtering.
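
The difference between the two filtering orders can be sketched with a toy catalog. This is an illustration only: it uses exact brute-force search in place of approximate nearest neighbor search, it does not use the OpenSearch or plugin APIs, and the items, vectors, and prices are all hypothetical.

```python
import math

# Hypothetical catalog: (item id, embedding, price in dollars).
catalog = [
    ("shirt-a", [0.9, 0.1], 120),
    ("shirt-b", [0.8, 0.2], 95),
    ("shirt-c", [0.7, 0.3], 150),
    ("shirt-d", [0.3, 0.7], 60),
    ("shirt-e", [0.2, 0.8], 75),
    ("shirt-f", [0.1, 0.9], 80),
]

def nearest(items, query, k):
    """Exact k-nearest neighbors by Euclidean distance (brute force)."""
    return sorted(items, key=lambda item: math.dist(item[1], query))[:k]

def in_price_range(item, low=55, high=85):
    """Metadata filter: keep items priced between low and high, inclusive."""
    return low <= item[2] <= high

query = [1.0, 0.0]
k = 3

# Post-filtering: find the k nearest neighbors first, then drop non-matching ones.
post_filtered = [item for item in nearest(catalog, query, k) if in_price_range(item)]

# Pre-filtering: restrict to matching items first, then find the k nearest among them.
pre_filtered = nearest([item for item in catalog if in_price_range(item)], query, k)

print(len(post_filtered), [item[0] for item in post_filtered])
print(len(pre_filtered), [item[0] for item in pre_filtered])
```

In this toy data the three items closest to the query all fall outside the price range, so post-filtering returns nothing, while pre-filtering still returns three in-range items.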

As mentioned in one of our previous posts, post-filtering is problematic because it has a high likelihood of returning far fewer results than the intended k-nearest neighbors. In fact, it could lead to zero results being returned. This leads to an unsatisfying user experience since very few, or no, relevant results might be returned for a particular search query.

GSI’s OpenSearch plugin supports pre-filtering, and even supports range filtering. For example, somebody searching for shirts could combine common filters such as brand, style, size, and color with a range filter that limits the search to shirts priced between $55 and $85.

Summary

This post showed some of the advantages of vector embedding search over keyword search — for example, better understanding user intent and improving recall in e-commerce applications where sellers either don’t enter very descriptive text, don’t use the right keywords, or enter incorrect text descriptions. Ultimately, these vector embedding advantages lead to improved business for sellers. We also presented our OpenSearch k-NN plugin that allows users to easily add production-grade vector embedding search to their search pipeline — saving them valuable time and resources. The plugin also provides billion-scale search along with strong filtering capability. If you want to try out our OpenSearch k-NN plugin, please contact us at opensearch@gsitechnology.com.